Launching an MVP: A Practical Look at Viability Milestones

- Kevin Guenther

- Mar 11, 2024

Updated: Dec 11, 2025

Using an admittedly strange metaphor to discuss my approach to creating an MVP, setting up metrics and course correcting.

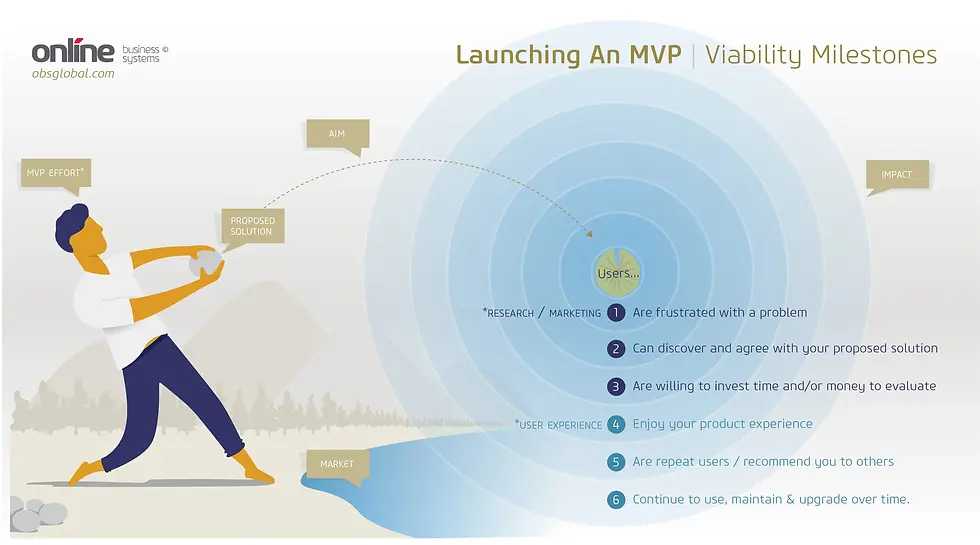

The illustration above came from an analogy I kept returning to when thinking about what it feels like to plan and launch a digital product. Picking the right stone, choosing your target, and throwing with purpose decide whether you get a plop or a satisfying splash. Product work isn’t that different. Preparation, practice, and the right aim determine whether users love what you’ve built and whether the business sees real value.

Tim Brown at IDEO describes the core goals of any good product or service as:

- Desirable for users

- Feasible to build

- Viable for the business

To know whether you’ve hit those marks, you need qualitative research, a lean build-and-test loop, and clear data that connects user behavior to product decisions.

Below is a mindset and workflow that help teams get there before investing heavily in a new product or feature.

Part 1. Selecting and Launching the Stone

MVP effort: Balancing scope with resources

There’s already plenty written about MVPs, so I’ll keep this simple. The real value of an MVP is speed: getting something into the hands of real users so you can learn quickly. That’s what breaks the old waterfall habit of planning everything upfront.

Where teams often slip is treating “minimum” and “viable” as competing ideas. They’re not. Your job is to balance them. A stripped-down product that doesn’t actually test viability isn’t useful. A fully polished product that takes too long to ship misses the point.

A strong MVP should always:

- Include only the capabilities required to test whether the idea solves a real problem.

- Ship with analytics baked in to measure usability, churn, retention, and conversion.

- Create at least one moment of delight, which helps users forgive rough edges and encourages repeat use.
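To make "analytics baked in" a little more concrete, here is a minimal Python sketch of how conversion, retention, and churn might be computed from per-user records. The `UserRecord` fields and the four-week retention window are my own assumptions for illustration, not a standard; use whatever definitions your team agrees on and keep them consistent.

```python
from dataclasses import dataclass


@dataclass
class UserRecord:
    """Hypothetical per-user summary exported from your analytics tool."""
    user_id: str
    signed_up: bool       # visitor completed sign-up
    active_week_4: bool   # still using the product in week four (assumed window)


def mvp_metrics(users: list[UserRecord]) -> dict[str, float]:
    """Return conversion, retention, and churn as fractions between 0 and 1."""
    visitors = len(users)
    signups = [u for u in users if u.signed_up]
    retained = [u for u in signups if u.active_week_4]

    # Conversion: share of visitors who signed up.
    conversion = len(signups) / visitors if visitors else 0.0
    # Retention: share of sign-ups still active at the end of the window.
    retention = len(retained) / len(signups) if signups else 0.0
    # Churn: share of sign-ups who did not stay.
    churn = 1.0 - retention if signups else 0.0

    return {"conversion": conversion, "retention": retention, "churn": churn}
```

The exact numbers matter less than measuring them the same way from day one, so that later throws can be compared with the first.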

Proposed solution: How well you understand the problem

Choosing what goes into your MVP depends on how well you understand your users and the problem you believe you’re solving.

It’s easier when you’re improving an existing product because you already have metrics, surveys, support tickets, and actual usage patterns. New products require more foundational research: market sizing, user interviews, observational research, and fast concept testing.

Before committing to your “stone,” make sure your business goals, technical capacity, and real user needs line up. Tools like TAM/SAM/SOM or Innovation Engineering “Yellow Cards” can help with business-side clarity. For user insight, surveys, ethnographic research, and Design Sprints will get you to a grounded starting point.
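For readers new to TAM/SAM/SOM, the arithmetic is simple: each figure is a slice of the one before it. The sketch below is a top-down estimate in Python; the function name, parameters, and every number in the usage example are hypothetical placeholders, not real market data.

```python
def market_sizing(
    total_customers: int,
    annual_revenue_per_customer: float,
    serviceable_share: float,
    obtainable_share: float,
) -> tuple[float, float, float]:
    """Top-down estimate of TAM, SAM, and SOM in annual revenue.

    serviceable_share: fraction of the total market your product can serve.
    obtainable_share: fraction of the serviceable market you can realistically win.
    """
    tam = total_customers * annual_revenue_per_customer  # Total Addressable Market
    sam = tam * serviceable_share                        # Serviceable Available Market
    som = sam * obtainable_share                         # Serviceable Obtainable Market
    return tam, sam, som


# Made-up inputs, purely for illustration.
tam, sam, som = market_sizing(1_000_000, 120.0, 0.25, 0.05)
print(f"TAM ${tam:,.0f} | SAM ${sam:,.0f} | SOM ${som:,.0f}")
```

If the SOM that falls out of this cannot support the business, that is worth knowing before you pick up the stone.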

Gut instinct will get you somewhere between “not bad” and “close enough,” but without validating assumptions, you won’t know where you actually stand until it’s too late.

Aim: Reaching the right users

Once your MVP is ready, the next challenge is getting it in front of enough of the right people. A few survey respondents or waitlist sign-ups aren’t proof of market traction.

Before launch, make sure you’ve thought through:

- How people will discover your product

- Which channels matter most

- How you’ll collect feedback and act on it

Tools like HubSpot or Marketo offer full-funnel tracking for teams with budget. Lighter options like Adjust, Mailchimp, SendGrid, and Google Analytics work well for smaller teams. Paid channels such as Meta Ads or X (Twitter) Ads also include built-in analytics.

Doing this groundwork may feel like a lot, but it’s faster and far more effective than lengthy requirements documents and rigid waterfall plans.

Part 2. Splash Down

Impact: What actually happened?

After launch, there’s always a short wait while the data rolls in. Early indicators show whether users:

- Recognized the problem you’re solving

- Found your product

- Cared enough to download, sign up, or install

If things flatten after that, usability is likely the culprit. Confusion, friction, or unmet expectations will stop users from returning or recommending your product. When they do recommend you, that’s often the first sign you’re onto something meaningful.
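One way to see where things flatten is to look at step-to-step conversion through the funnel. The following Python sketch assumes you can export a count of users at each stage; the stage names and counts in the usage example are invented.

```python
def funnel_report(stages: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Return each stage's conversion rate relative to the stage before it."""
    report = []
    for (_, prev_count), (name, count) in zip(stages, stages[1:]):
        rate = count / prev_count if prev_count else 0.0
        report.append((name, rate))
    return report


def weakest_step(stages: list[tuple[str, int]]) -> tuple[str, float]:
    """Return the stage with the lowest conversion from its predecessor."""
    return min(funnel_report(stages), key=lambda step: step[1])


# Invented counts for illustration only.
stages = [("saw_ad", 1000), ("visited", 400), ("signed_up", 100), ("returned", 10)]
print(funnel_report(stages))
print(weakest_step(stages))
```

A weak early step points to discovery or messaging; a weak step after sign-up points toward the usability problems described above.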

The long-term goal is retention. However you measure engagement (daily use, weekly active users, feature adoption, update frequency), it all feeds into Customer Lifetime Value (CLV). You don’t need a perfect formula, but you do need a consistent one.
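As an example of a consistent-if-imperfect formula, one common simplification treats expected customer lifetime as the inverse of monthly churn. The Python sketch below uses that simplification; it is one of several CLV models, and the function name and inputs are assumptions for illustration.

```python
def simple_clv(monthly_revenue_per_user: float, gross_margin: float, monthly_churn: float) -> float:
    """Estimate CLV as monthly margin per user times expected lifetime in months.

    Assumes constant churn, so expected lifetime is 1 / monthly_churn.
    gross_margin and monthly_churn are fractions between 0 and 1.
    """
    if not 0 < monthly_churn <= 1:
        raise ValueError("monthly_churn must be in the range (0, 1]")
    expected_lifetime_months = 1 / monthly_churn
    return monthly_revenue_per_user * gross_margin * expected_lifetime_months


# Made-up inputs: $20/month, 80% margin, 5% monthly churn.
print(round(simple_clv(20.0, 0.8, 0.05), 2))
```

Because churn sits in the denominator, small retention improvements move this number a lot, which is why retention is the metric to watch.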

If you’re designing internal tools instead of revenue-generating products, measure efficiency, error rates, and employee satisfaction rather than retention.

Experimentation: Throwing again

Even with solid analytics, you still need qualitative insight. Watch people use your product. Observe their environment. Note where they hesitate or where they invent workarounds.

From there, build an experimentation roadmap. Form hypotheses, run structured tests, and refine. Like skipping stones, your first throw rarely lands exactly where you want it. The learning cycle is the work.
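A structured test can be as simple as comparing conversion between two variants. The sketch below implements a standard two-proportion z-test using only Python's standard library; the function name and the sample numbers are hypothetical, and it assumes samples large enough for the normal approximation to hold.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of variants A and B.

    Returns (z, p_value), where p_value is two-sided.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: 100/1000 conversions on A, 130/1000 on B.
z, p = two_proportion_z_test(100, 1000, 130, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Write the hypothesis and the threshold down before looking at the data; otherwise it is easy to talk yourself into a splash that was really a plop.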

Conclusion

Launching a digital product shares a simple truth with throwing stones: the part you control is the preparation. The more often you run the process—research, build, measure, refine—the better your aim becomes. A lean approach ensures you never rely on a single throw. You always get another chance to improve and try again.
